Category: Technology

Benefits of Integrating Legacy Systems with Modern Applications

In a rapidly evolving business environment, integrating legacy systems with modern applications is not just a strategic choice but a necessity for organizations aiming to grow in the digital age. Integration brings challenges, but its benefits outweigh them.

Introduction to Integration

Integration in software systems refers to connecting different applications and technologies so that they work together seamlessly. When we talk about integrating legacy systems with modern applications, we mean bridging the gap between older, often outdated systems and the innovative solutions driving businesses today.

Businesses that fail to integrate their legacy systems with modern applications risk falling behind their competitors. Integration allows organizations to use the power of their existing systems while incorporating the flexibility and capabilities of newer technologies.
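As a minimal sketch of what bridging that gap can look like, the adapter below turns a fixed-width record, a format many older systems export, into JSON that a modern application can consume. The record layout (field names and column offsets) is hypothetical and chosen only for illustration.

```python
import json

# Hypothetical fixed-width layout of a legacy appointment record:
# (field name, start offset, end offset), 0-based and end-exclusive.
LAYOUT = [
    ("patient_id", 0, 8),
    ("date", 8, 16),    # YYYYMMDD
    ("time", 16, 20),   # HHMM
    ("clinic", 20, 30),
]

def parse_legacy_record(line: str) -> dict:
    """Slice a fixed-width line into named, whitespace-trimmed fields."""
    record = {name: line[start:end].strip() for name, start, end in LAYOUT}
    # Convert the legacy date and time columns into one ISO 8601 timestamp.
    d, t = record.pop("date"), record.pop("time")
    record["start"] = f"{d[0:4]}-{d[4:6]}-{d[6:8]}T{t[0:2]}:{t[2:4]}"
    return record

def to_json(line: str) -> str:
    """Serialize a parsed legacy record for a modern (e.g. REST) consumer."""
    return json.dumps(parse_legacy_record(line))

if __name__ == "__main__":
    print(to_json("00012345202405140930CARDIO    "))
```

Keeping the translation in one adapter means the legacy system itself does not have to change; only the adapter needs updating if either side's format evolves.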

Let’s dive into the advantages that businesses can realize through effective integration.

- Operational Efficiency: Integrating legacy systems with modern applications boosts operational efficiency by automating tasks, reducing errors, and optimizing resource use. Imagine a hospital that uses a legacy system for patient scheduling. By integrating it with a modern appointment booking application, the hospital can automate scheduling, reduce errors, and use staff time more efficiently.

- Data Accessibility and Insights: Integration breaks down data silos, providing real-time access to comprehensive data for informed decision-making and strategic planning. In healthcare, integrated systems allow doctors to access patient records seamlessly across departments, giving them real-time data for better diagnosis and treatment decisions.

- Enhanced Customer Experience: Integration enables personalized services and faster responses, leading to greater customer satisfaction, loyalty, and business growth. Patients benefit from integrated systems through care plans personalized to their medical history and preferences, and faster response times and tailored services improve overall satisfaction.

- Agility and Adaptability: Integrated systems make a business more agile, allowing it to innovate faster and respond swiftly to market changes. They enable healthcare providers to adapt quickly to new regulations or treatment protocols, so they stay responsive to changing patient needs.

- Cost Savings and ROI: Despite the upfront investment, integration reduces operational expenses, boosts revenue, and provides measurable returns over time. Hospitals, for example, save money by streamlining processes, and fewer errors and better patient outcomes lead to lower long-term costs and a higher return on investment.

- Improved Collaboration and Communication: Integration fosters collaboration across teams, improving decision-making and productivity. Integrated systems allow healthcare teams to share patient information securely and collaborate efficiently on treatment plans, leading to better patient care.

- Enhanced Business Intelligence and Analytics: Integrated systems give organizations the data foundation for advanced analytics, enabling data-driven growth and innovation. By combining data from different departments, healthcare organizations gain insights for improving services, predicting patient trends, and optimizing resource allocation.

- Regulatory Compliance and Risk Management: Integration helps ensure compliance, automates reporting, and strengthens risk management. Integrated systems help healthcare providers meet regulatory requirements by automating compliance checks and reducing data security risks.

- Streamlined Customer Relationship Management (CRM): Integration provides a unified view of customer data, improving CRM practices and customer relationships. In healthcare, integrated systems unify patient information from various touchpoints, allowing providers to offer personalized care and anticipate patient needs.

- Reduced IT Complexity and Technical Debt: Integration modernizes IT infrastructure, reducing complexity and technical debt. Healthcare organizations simplify their IT landscape by integrating systems, retiring outdated applications, and adopting scalable solutions that support future needs.

- Employee Satisfaction and Retention: Integrated systems improve employee satisfaction by eliminating manual tasks and providing modern tools. Healthcare staff spend less time on administrative tasks and more time on patient care, which can lead to higher job satisfaction and retention.

Embracing the Power of Integration

In summary, the benefits of integrating legacy systems with modern applications extend far beyond technical improvements—they directly impact business performance and competitiveness. From operational efficiency and data accessibility to enhanced customer experience and cost savings, integration empowers organizations to unlock new opportunities and navigate digital transformation with confidence.

Are you ready to harness the power of integration for your business? Reach out to experts like ProweSolution to start your integration journey and experience the benefits firsthand. Together, we can leverage technology to drive efficiency, innovation, and growth in your organization.

Ethical Considerations in Health Tech: Balancing Innovation with Data Privacy

Understanding Health Tech

The integration of technology into healthcare, commonly referred to as health tech, has brought about revolutionary changes in patient care and medical research. However, this rapid innovation raises significant ethical concerns, particularly regarding data privacy.

Understanding Ethical Considerations in Health Tech

Ethical considerations in health tech span a range of issues, including data privacy, consent, transparency, and fairness. Protecting patient data and using it responsibly is fundamental to building and maintaining public trust. Ethical practices not only safeguard patient rights but also strengthen the credibility and reputation of health tech companies.

Data Security

Data security is crucial in health tech because the growing use of electronic health records (EHRs) and health information exchanges (HIEs) increases the risk of data breaches. Breaches can lead to identity theft, insurance fraud, and compromised patient care, and cyberattacks targeting the healthcare sector have been increasing.

Informed Consent

Informed consent is vital in healthcare, ensuring that patients understand the risks and benefits of medical procedures or research. In health tech, it also means informing patients about what data is collected, why, and who can access it.

Transparency in AI Algorithms

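AI in healthcare promises better diagnostics and treatments, but transparency in AI algorithms is essential for trust: patients and providers need to understand how an AI system reaches its decisions. In the EU, for example, the GDPR entitles individuals who are subject to solely automated decisions with significant effects to meaningful information about the logic involved.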

Recommendations:

To achieve a balance between innovation and data privacy in health tech, the following recommendations are proposed:

- Ensure Regulatory Compliance: Stay updated with health tech regulations to ensure products meet legal standards.

- Data Minimization: Implement practices to collect only necessary data for specific purposes, minimizing the amount of personal data stored and processed.

- User Education and Training: Provide ongoing education and training to healthcare professionals and patients about data privacy best practices and the importance of safeguarding personal health information.

- Secure Data Sharing Protocols: Establish secure protocols for sharing data with authorized entities, ensuring that data transfers are encrypted and access controls are strictly enforced.

- Incident Response Planning: Develop and regularly update incident response plans to quickly and effectively address data breaches or security incidents, minimizing their impact on patients and operations.

- Third-Party Vendor Oversight: Implement rigorous oversight and due diligence processes for third-party vendors and partners handling health data to ensure they meet stringent data privacy and security standards.

- Continuous Risk Assessment: Conduct regular risk assessments to identify new threats and vulnerabilities, adapting security measures accordingly to protect against emerging risks.

- Patient Access and Control: Provide patients with easy access to their health data and empower them with control over how their data is used and shared, promoting patient autonomy and trust.

- Cross-sector Collaboration: Foster collaboration between health tech innovators, regulators, policymakers, and privacy advocates to develop comprehensive frameworks that balance innovation with robust data protection.

- Anonymization and De-identification: Utilize robust techniques to anonymize or de-identify data to protect patient identities while still allowing for meaningful analysis and innovation.
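Two of these recommendations, data minimization and de-identification, can be sketched in a few lines. The example below keeps only an allow-listed set of fields and replaces the patient ID with a keyed hash (HMAC-SHA256). The field names and key are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization: whoever holds the key can re-link records, and the GDPR still treats pseudonymized data as personal data.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields one analytics purpose actually needs.
ALLOWED_FIELDS = {"age", "diagnosis_code", "visit_date"}

def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same ID and key always give the same token, so records stay linkable,
    but without the key nobody can recompute a token from a guessed ID
    (which a plain, unkeyed hash would allow).
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record: dict, key: bytes) -> dict:
    """Keep only allow-listed fields and swap the patient ID for a pseudonym."""
    out = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    out["subject"] = pseudonymize(record["patient_id"], key)
    return out

if __name__ == "__main__":
    raw = {"patient_id": "P-001", "name": "Jane Doe", "age": 42,
           "diagnosis_code": "E11", "visit_date": "2024-05-14"}
    print(minimize(raw, key=b"demo-key-store-securely"))
```

In practice the key would live in a secrets manager, separate from the de-identified dataset.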

Conclusion

In conclusion, while health tech innovation holds immense potential, it must not come at the cost of compromising patient data privacy. Responsible innovation not only protects patient privacy but also fosters trust and credibility. Ethical considerations must guide the development and implementation of health tech solutions to ensure that patient welfare remains at the forefront of this digital revolution.

Utilizing Power BI’s Forecasting Features for Business Forecasting

Introduction

Accurate business forecasting is the compass that guides successful decision-making in today’s dynamic marketplace. With Power BI’s forecasting features, businesses can understand future trends with confidence, turning data into actionable insights.

Forecasting plays an important role in business planning and strategy. Traditionally, organizations relied on manual methods and basic statistical tools for forecasting, which often led to inaccuracies and missed opportunities. Advanced analytics tools like Power BI have revolutionized this landscape, offering powerful features that enable businesses to make more accurate and insightful predictions.

Introduction to Power BI’s Forecasting Features:

Power BI’s forecasting capabilities encompass time series forecasting, predictive analytics, and custom forecasting options. These features enable businesses to analyze historical data, uncover patterns, and make informed predictions about future trends.
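Exponential smoothing is one of the most common time series forecasting techniques (and, as we understand it, the basis of the forecast option on Power BI line charts). The sketch below is not Power BI's implementation; it is a minimal Python version of Holt's linear method, which smooths a level and a trend and projects them forward. It omits seasonality and confidence intervals, and the `alpha` and `beta` values in the example are arbitrary.

```python
from typing import List, Sequence

def holt_forecast(series: Sequence[float], alpha: float, beta: float, horizon: int) -> List[float]:
    """Holt's linear (double) exponential smoothing.

    alpha weights new observations when updating the level, beta weights new
    evidence when updating the trend; both must lie in (0, 1].
    Returns `horizon` point forecasts following the last observation.
    """
    if len(series) < 2:
        raise ValueError("need at least two observations")
    if not (0 < alpha <= 1 and 0 < beta <= 1):
        raise ValueError("alpha and beta must be in (0, 1]")
    # Initialize level with the first value and trend with the first difference.
    level, trend = series[0], series[1] - series[0]
    for y in series[1:]:
        prev_level = level
        level = alpha * y + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    # Project the final level forward along the final trend.
    return [level + h * trend for h in range(1, horizon + 1)]

if __name__ == "__main__":
    monthly_sales = [120, 132, 128, 141, 150, 158]  # hypothetical data
    print(holt_forecast(monthly_sales, alpha=0.5, beta=0.3, horizon=3))
```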

Benefits of Using Power BI for Business Forecasting:

- Improved Accuracy and Continuous Learning: Statistical and machine learning models can produce more precise forecasts than manual methods, and refreshing them with new data helps forecasts stay accurate over time.

- Flexibility and Custom Alerts: Customize forecasting methods to suit specific business needs, and set up custom alerts based on forecasting models to notify stakeholders of significant changes or anomalies, enabling proactive decision-making and risk mitigation.

- Scalability and Mobile Accessibility: Power BI is designed to handle large datasets, and its mobile app lets decision-makers access forecasts and insights on the go.

- Visualization and Collaboration: Intuitive visualizations make forecasts easier to interpret and communicate, and Power BI’s collaboration features let teams share dashboards, reports, and insights in real time.

- Data Integration and Historical Analysis: Power BI’s seamless integration with various data sources allows for a holistic view of business operations. This integration, coupled with the ability to analyze historical data alongside forecasts, provides context and helps businesses understand trends.

- Cost Efficiency and Automation: Power BI’s automation capabilities streamline repetitive forecasting tasks and reduce manual errors. Beyond saving time, the resulting forecasts can cut costs by informing inventory levels, reducing waste, and highlighting cost-saving opportunities.

- Compliance and Security: Power BI offers robust security features and compliance certifications that help protect sensitive business data.

Practical Tips and Best Practices: To make the most of Power BI’s forecasting features:

- Data Preparation: Ensure data is clean, consistent, and relevant.

- Model Selection: Choose the right forecasting model based on your data type and business goals.

- Parameter Tuning: Fine-tune model parameters for optimal performance.

- Interpreting Results: Understand forecast outputs to derive actionable insights.
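These tips (prepare the data, choose a model, tune its parameters, check the results) can be illustrated outside Power BI with a small holdout experiment. The sketch below fits simple exponential smoothing for several smoothing factors, scores each on the last few points it did not see, and keeps the one with the lowest mean absolute error. The data and the grid of candidate values are hypothetical.

```python
from typing import List, Sequence, Tuple

def ses_forecast(train: Sequence[float], alpha: float, horizon: int) -> List[float]:
    """Simple exponential smoothing: a flat forecast equal to the final smoothed level."""
    level = train[0]
    for y in train[1:]:
        level = alpha * y + (1 - alpha) * level
    return [level] * horizon

def mean_absolute_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def tune_alpha(series: Sequence[float], holdout: int, grid: Sequence[float]) -> Tuple[float, float]:
    """Fit on all but the last `holdout` points, score each alpha on that
    held-out tail, and return (best_alpha, best_mae)."""
    train, test = series[:-holdout], series[-holdout:]
    scores = [(mean_absolute_error(test, ses_forecast(train, a, holdout)), a) for a in grid]
    best_mae, best_alpha = min(scores)
    return best_alpha, best_mae

if __name__ == "__main__":
    raw = [100, 102, None, 101, 105, 107, 106, 110, 112, 111]
    # Data preparation: dropping gaps is the crudest option; interpolation is often better.
    series = [y for y in raw if y is not None]
    grid = [i / 10 for i in range(1, 10)]  # candidate alphas 0.1 .. 0.9
    alpha, mae = tune_alpha(series, holdout=3, grid=grid)
    print(f"best alpha={alpha:.1f}, holdout MAE={mae:.2f}")
```

Scoring on data the model never saw is what makes the "Interpreting Results" step meaningful: the holdout error is an honest estimate of how far off future forecasts may be.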

Challenges:

- Data Quality: Regularly audit and clean data to maintain accuracy.

- Model Accuracy: Continuously validate and refine forecasting models.

- Interpretation: Invest in training to understand and interpret forecast results effectively.

Conclusion:

Power BI’s forecasting features hold immense potential for businesses aiming to enhance their forecasting accuracy and strategic decision-making. As you navigate the world of business forecasting, remember to harness the power of Power BI to unlock valuable insights.

Explore its capabilities, experiment with its features, and follow ProweSolution Private Limited on LinkedIn to share your experiences and insights. Embrace the future of forecasting with Power BI!

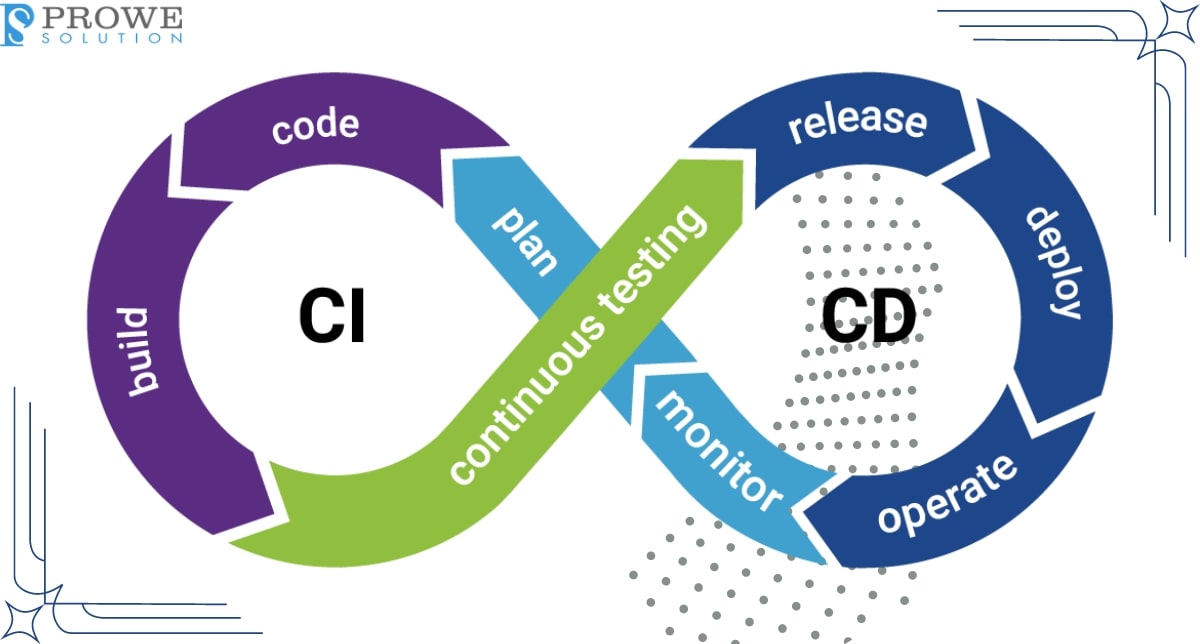

Mastering Continuous Integration And Deployment (CI/CD) In The Cloud: Best Practices For Agile Development!

Are you ready to revolutionize your software development process?

How can you ensure faster releases without compromising quality? The answer lies in Continuous Integration and Deployment (CI/CD) practices. In this article, we’ll explore how CI/CD in the cloud can supercharge your development lifecycle and propel your team towards success.

Embrace Automation:

Automation lies at the heart of CI/CD. Automate the entire software delivery pipeline, from code integration and testing to deployment and monitoring. By automating repetitive tasks, teams can minimize human errors, reduce cycle times, and increase efficiency.
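To make the idea of an automated pipeline concrete, here is a toy Python runner that executes stages in order and halts at the first failure, so a failing test stage can never be followed by a deployment. Real pipelines are normally defined in the CI service itself rather than in a script like this; the stage names and lambdas below are hypothetical placeholders.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]

def run_pipeline(stages: List[Stage]) -> Tuple[List[str], bool]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns the names of the stages that actually ran and whether the
    whole pipeline succeeded.
    """
    executed = []
    for name, step in stages:
        executed.append(name)
        if not step():
            print(f"[FAIL] {name}: halting pipeline")
            return executed, False
        print(f"[PASS] {name}")
    return executed, True

if __name__ == "__main__":
    run_pipeline([
        ("build", lambda: True),
        ("unit-tests", lambda: False),  # simulated test failure
        ("deploy", lambda: True),       # never reached
    ])
```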

Build a Scalable Infrastructure:

The cloud offers unparalleled scalability and flexibility, making it an ideal environment for CI/CD implementations. Leverage cloud services such as AWS CodePipeline, Azure DevOps, or Google Cloud Build to build scalable and resilient CI/CD pipelines that can adapt to changing workloads and requirements.

Adopt Infrastructure as Code (IaC):

Infrastructure as Code (IaC) enables teams to define and manage infrastructure using code. By treating infrastructure as code, changes can be version-controlled, tested, and deployed alongside application code, ensuring consistency and reproducibility across environments.
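At the core of most IaC tools is a comparison between the desired state declared in code and the actual state of the environment, which yields a plan of changes (Terraform's `terraform plan` is a well-known example). The Python sketch below shows that idea in miniature. The resource names and attributes are hypothetical, and real tools also handle dependencies, providers, and state locking.

```python
from typing import Dict, List, Tuple

# Resource name -> configuration attributes (hypothetical schema).
Resources = Dict[str, Dict[str, object]]

def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """List the actions needed to make `actual` match `desired`."""
    actions = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))   # declared but missing
        elif name not in desired:
            actions.append(("delete", name))   # exists but no longer declared
        elif desired[name] != actual[name]:
            actions.append(("update", name))   # configuration has drifted
    return actions

if __name__ == "__main__":
    desired = {"web-vm": {"size": "small", "count": 2}, "db": {"tier": "standard"}}
    actual = {"web-vm": {"size": "small", "count": 1}, "cache": {"tier": "basic"}}
    for action, name in plan(desired, actual):
        print(action, name)
```

Because the desired state is plain data under version control, the same file produces the same plan in every environment, which is where the consistency and reproducibility come from.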

Implement Automated Testing:

Automated testing is a cornerstone of CI/CD. Implement unit tests, integration tests, and end-to-end tests to validate code changes and catch bugs early in the development process. Integrate testing tools such as JUnit, Selenium, or Jest into your CI/CD pipeline to automate the testing process and ensure the reliability of your applications.
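The tools named above are JUnit, Selenium, and Jest; the same idea in Python's built-in `unittest` module looks like the sketch below. The `apply_discount` function is a hypothetical piece of application code, and each test method checks one behavior.

```python
import unittest

def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)

class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 15), 170.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 120)
```

In a CI pipeline, a step such as `python -m unittest` would discover and run these tests on every commit and fail the build if any of them fails.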

Foster Collaboration and Communication:

CI/CD encourages collaboration and communication among development, operations, and quality assurance teams. Foster a culture of collaboration by breaking down silos, promoting transparency, and encouraging feedback loops. Tools such as Slack, Microsoft Teams, or Atlassian Jira can facilitate communication and collaboration across distributed teams.

Monitor and Measure Performance:

Continuous monitoring is essential for identifying issues, optimizing performance, and ensuring the reliability of applications in production. Implement monitoring and logging solutions such as Prometheus, Grafana, or ELK Stack to track key metrics, detect anomalies, and troubleshoot issues in real-time.
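Monitoring stacks such as Prometheus and Grafana normally express anomaly checks as alerting rules over stored metrics. To illustrate just the statistical idea, the Python sketch below flags a latency sample when it sits more than three standard deviations from the mean of the preceding window (a rolling z-score). The window size, threshold, and sample values are illustrative assumptions, not recommended settings.

```python
from statistics import mean, stdev
from typing import List, Sequence

def detect_anomalies(values: Sequence[float], window: int = 5, threshold: float = 3.0) -> List[int]:
    """Return indices whose value deviates from the preceding `window` points
    by more than `threshold` sample standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change as anomalous.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

if __name__ == "__main__":
    latencies_ms = [120, 118, 125, 122, 119, 121, 480, 123, 120]  # hypothetical samples
    print(detect_anomalies(latencies_ms))
```

A large spike also inflates the windows that follow it, which can mask a second anomaly right after the first; production detectors often use more robust statistics, such as the median.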

Iterate and Improve:

Continuous improvement is at the core of CI/CD. Encourage a culture of experimentation, iteration, and learning within your team. Collect feedback from stakeholders, analyze metrics, and iterate on your CI/CD pipeline to drive continuous improvement and innovation.

Conclusion:

Mastering CI/CD in the cloud demands best practices, automation, and collaboration. By embracing these principles, teams accelerate development, enhance software quality, and deliver value swiftly. Embrace the cloud, automate rigorously, and iterate incessantly to outpace competitors.

How Does Azure Data Factory Benefit Data Integration?

In today’s fast-paced digital landscape, data has become the lifeblood of businesses, driving decision-making, powering insights, and fuelling innovation. However, with data stored in disparate sources and formats, integrating and managing this wealth of information can pose significant challenges for organizations. Enter Azure Data Factory: Microsoft’s cloud-based data integration service designed to simplify and streamline the process of bringing together data from diverse sources, transforming it into actionable insights, and driving business value. In this article, we’ll explore how Azure Data Factory works, its key benefits, and how businesses can leverage it to enhance their data integration capabilities.

Understanding Azure Data Factory:

At its core, Azure Data Factory serves as a fully managed data integration service that enables organizations to create, orchestrate, and manage data pipelines at scale. These data pipelines facilitate the movement and transformation of data from various sources to destinations, such as data lakes, data warehouses, and analytics platforms. With Azure Data Factory, users can construct complex data workflows using a graphical interface, reducing the need for extensive coding and scripting.

Key Benefits of Azure Data Factory:

Unified Data Integration Platform: Azure Data Factory provides a unified platform for designing, deploying, and monitoring data integration workflows. Its intuitive interface allows users to design pipelines visually, making it accessible to both technical and non-technical users.

Hybrid Data Integration: Azure Data Factory seamlessly integrates with both on-premises and cloud-based data sources, providing organizations with the flexibility to leverage their existing infrastructure investments while taking advantage of the scalability and agility of the cloud.

Automated Data Orchestration: Automation is at the heart of Azure Data Factory. Users can schedule and automate the execution of data pipelines, ensuring that data is processed and delivered in a timely manner without manual intervention.

Advanced Data Transformation: With built-in data transformation capabilities, Azure Data Factory enables users to cleanse, enrich, and transform data as it moves through the integration pipeline. This ensures that data is standardized, cleansed, and ready for analysis.

Integration with Azure Services: Azure Data Factory seamlessly integrates with a wide range of Azure services, including Azure Synapse Analytics, Azure Databricks, and Azure SQL Database. This tight integration allows organizations to build end-to-end data solutions that span across different Azure services.

Scalability and Cost-Effectiveness: Azure Data Factory is designed to scale with the needs of your organization, enabling you to handle large volumes of data and accommodate growing workloads. Additionally, its pay-as-you-go pricing model ensures cost efficiency, allowing organizations to pay only for the resources they consume.
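In Azure Data Factory, transformations like the cleansing and enrichment described under Advanced Data Transformation are typically built in the service itself (for example, with mapping data flows) rather than in Python. Purely to illustrate what cleansing, standardizing, and enriching mean for a batch of records, here is a plain-Python sketch; the field names and the country lookup table are hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical reference data used to enrich records during transformation.
COUNTRY_NAMES = {"US": "United States", "IN": "India", "GB": "United Kingdom"}

def transform(rows: List[Dict[str, Optional[str]]]) -> List[Dict[str, object]]:
    """Cleanse (drop unusable rows, trim whitespace), standardize (case, types),
    and enrich (add a country name) a batch of raw customer rows."""
    out = []
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        if not email:
            continue  # cleanse: a row without an email is unusable downstream
        code = (row.get("country") or "").strip().upper()
        out.append({
            "email": email,
            "country_code": code,
            "country_name": COUNTRY_NAMES.get(code, "Unknown"),  # enrich
            "orders": int(row.get("orders") or 0),               # standardize type
        })
    return out

if __name__ == "__main__":
    raw_rows = [
        {"email": "  Alice@Example.com ", "country": "us", "orders": "3"},
        {"email": None, "country": "IN", "orders": "1"},
    ]
    print(transform(raw_rows))
```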

Leveraging Azure Data Factory for Business Success

With its powerful features and capabilities, Azure Data Factory empowers organizations to overcome the challenges associated with data integration and harness the full potential of their data assets. Whether you’re migrating data to the cloud, building data warehouses, or implementing data-driven analytics solutions, Azure Data Factory provides the tools and capabilities you need to succeed in today’s data-driven world.

In conclusion, Azure Data Factory represents a game-changer in the realm of data integration, offering organizations a comprehensive and scalable solution for managing their data workflows. By leveraging Azure Data Factory, businesses can streamline their data integration processes, drive operational efficiencies, and unlock actionable insights that drive business growth and innovation.

For further information, visit us at https://prowesolution.com/


The Intersection of Generative AI and Cybersecurity

All You Need to Know

Generative AI is growing fast and meeting cybersecurity in new and sometimes tricky ways. While it can do amazing things, it also brings new problems we need to deal with.

What is Generative AI?

Generative AI is a part of artificial intelligence that makes new data based on existing information. People use it to make art, music, and even help find new medicines. In cybersecurity, it helps spot threats, understand data, and automate some security tasks. But it also brings up worries about AI-made cyber threats and how people might misuse it.

Why Should You Care?

Knowing how generative AI and cybersecurity mix is super important for people who work in this area. It helps them keep up with new cyber threats, set up good security, and keep important data and systems safe.

How Generative AI Can Support Cybersecurity Efforts:

- Scenario-driven cybersecurity training: Generative AI can create simulated cyberattacks and situations. This helps train people to respond well when real attacks happen.

- Synthetic data generation: Generative AI can make fake but realistic data. This is useful for testing AI tools and making software without using real personal information, keeping it safe.

- Contextualized security monitoring: Using generative AI, security teams can find weak spots and offer specific ways to fix them, making security more effective.

- Supply chain risk management: Generative AI helps in predicting when equipment might break, spotting fraud, and managing business relationships better.

- Threat intelligence and hunting: Generative AI can go through lots of data to find weak points and suggest how to make security better.

- Digital forensics: After an attack, generative AI can help experts look at what happened to find out how attackers got in and what they did.

- Automated patch management: Generative AI can make applying updates to software easier and more efficient, making systems safer.

- Phishing detection: Generative AI can spot signs of phishing attacks, like strange language or bad links, helping to stop them before they cause harm.
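The list above describes detection by generative AI. As a much simpler, swapped-in illustration, the rule-based checker below flags the kinds of cues the last bullet mentions: pressure language and links whose visible text hides their real destination or that point at a raw IP address. It is not generative AI, the phrase list is hypothetical, and real detectors, AI-based or not, combine many more signals.

```python
import ipaddress
import re
from typing import List, Tuple
from urllib.parse import urlparse

# Hypothetical pressure phrases often seen in phishing lures.
URGENCY_PHRASES = ("verify your account", "urgent", "password expires", "act now")

def _host(url: str) -> str:
    return (urlparse(url).hostname or "").lower()

def phishing_signals(body: str, links: List[Tuple[str, str]]) -> List[str]:
    """Return human-readable red flags for an email.

    `links` holds (display_text, href) pairs extracted from the message.
    """
    signals = []
    lowered = body.lower()
    for phrase in URGENCY_PHRASES:
        if phrase in lowered:
            signals.append(f"urgency phrase: {phrase!r}")
    for text, href in links:
        host = _host(href)
        try:
            ipaddress.ip_address(host)
            signals.append(f"link uses raw IP address: {href}")
        except ValueError:
            pass  # not an IP literal
        # Visible text that looks like a domain but differs from the real target.
        shown = _host(text if "://" in text else f"http://{text}")
        if re.fullmatch(r"[\w.-]+\.[a-z]{2,}", shown) and shown != host:
            signals.append(f"link text {text!r} hides destination {host!r}")
    return signals

if __name__ == "__main__":
    print(phishing_signals(
        "URGENT: verify your account today",
        [("www.mybank.com", "http://mybank.example-login.ru/x"),
         ("Click here", "http://192.168.0.5/login")],
    ))
```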

What Generative AI Does in Cybersecurity:

Generative AI uses special algorithms to create content. This is useful in cybersecurity because it helps find strange things in big data sets and can do some security jobs automatically. For example, it can spot weird internet traffic that might mean a cyberattack is happening.

The Problems from Generative AI:

Generative AI can also create new cyber threats. AI-made phishing emails can look just like real emails and trick people into giving away secrets. Deepfakes are realistic fake videos or audio that can fool people or change what they think. Attackers may also try to trick AI systems themselves by feeding them poisoned training data or specially crafted inputs.

How to Fight Back:

To protect against these new threats, companies should use several layers of security. Training people to spot AI-made phishing emails is a must. They also need tools that can detect deepfakes and manipulated data. It’s also important to use AI responsibly, monitor systems continuously, and keep updating security plans.

Problems and Fixes:

Companies might find it hard to tell if something was made by AI or to keep up with new ways AI attacks. Keeping AI safe from fake data is also a big worry. To fix these, companies should train people in AI security, use AI to defend against attacks, and work with AI experts to make systems stronger.

Conclusion:

The mix of generative AI and cybersecurity has good and bad sides. Being careful, ready, and always learning is the best way to handle it. Stay updated, use the best methods, and work with experts to handle this new mix of tech and security.

MuleSoft: Orchestrating Seamless Integration in Today’s Connected World

In today’s hyper-connected digital landscape, businesses face the challenge of integrating various systems, applications, and data sources to ensure smooth operations and deliver exceptional customer experiences. This is where MuleSoft, a leading integration platform, comes into play, empowering organizations to orchestrate seamless integration in the modern connected world.

Understanding MuleSoft

MuleSoft, part of the Salesforce ecosystem, provides a comprehensive integration platform that enables businesses to connect applications, data, and devices seamlessly, regardless of where they reside—on-premises, in the cloud, or across hybrid environments. At its core, MuleSoft’s Anypoint Platform offers a unified solution for API-led connectivity, application integration, and data synchronization.

MuleSoft’s Anypoint Platform consists of several key components, including:

- Anypoint Studio: A graphical design environment for building integration flows using MuleSoft’s drag-and-drop interface.

- Anypoint Exchange: A centralized repository for discovering and sharing reusable integration assets, such as APIs, connectors, templates, and examples.

- Anypoint Design Center: A collaborative environment for designing APIs, data mappings, and process flows, enabling teams to work together seamlessly.

- Anypoint Management Center: A centralized console for managing and monitoring integration applications, APIs, and runtime environments.

Benefits of MuleSoft Integration

1. Enhanced Connectivity: MuleSoft connects disparate systems, allowing organizations to unlock siloed data and applications, streamline processes, and drive innovation. By integrating systems and applications across the enterprise, businesses can break down data silos, improve information sharing, and enable real-time collaboration.

2. Agility and Scalability: With MuleSoft, businesses can adapt to changing market demands and scale their integration initiatives, supporting agility and resilience in a dynamic business environment. MuleSoft’s lightweight runtime engine, Mule Runtime, lets organizations deploy integration applications on-premises, in the cloud, or in hybrid environments, providing flexibility and scalability.

3. Accelerated Time-to-Value: MuleSoft’s reusable integration assets, pre-built connectors, and drag-and-drop design interface help organizations shorten development cycles and deliver projects faster. By drawing on MuleSoft’s extensive library of connectors and templates, developers can reduce development time and effort, allowing businesses to respond to market changes and customer demands more quickly.

4. Improved Customer Experiences: By integrating customer-facing systems and backend processes, businesses can deliver personalized experiences, improve service delivery, and foster customer loyalty. For example, a retail organization can use MuleSoft to integrate its e-commerce platform with its customer relationship management (CRM) system, enabling personalized recommendations, seamless order fulfilment, and proactive customer support.

5. Data-driven Insights: MuleSoft enables organizations to aggregate and analyse data from disparate sources, providing valuable insights that drive informed decision-making and fuel business growth. By integrating data from various systems and applications, businesses can gain a holistic view of their operations, identify trends and patterns, and uncover opportunities for optimization and innovation.

Real-World Applications

Numerous organizations across industries have leveraged MuleSoft to transform their integration capabilities and achieve tangible business outcomes. For example, a global retailer utilized MuleSoft to integrate its e-commerce platform with its inventory management system, enabling real-time inventory updates, improving order fulfilment, and enhancing the overall customer experience. Similarly, a financial services firm used MuleSoft to integrate its core banking systems with its customer-facing applications, enabling seamless account management, personalized financial advice, and secure transactions.

Best Practices for MuleSoft Integration

1. Define Clear Integration Objectives: Before embarking on an integration project, it’s essential to clearly define your integration goals and prioritize initiatives that align with your business objectives. Whether you’re aiming to improve operational efficiency, enhance customer experiences, or drive innovation, having a clear understanding of your integration objectives will guide your implementation strategy and ensure successful outcomes.

2. Adopt API-led Connectivity: Embrace an API-led approach to integration, designing reusable APIs that encapsulate backend services and promote agility and scalability. By exposing business capabilities as APIs, you can decouple systems and applications, enabling them to communicate with each other in a standardized and efficient manner. MuleSoft’s Anypoint Platform provides comprehensive support for API-led connectivity, offering tools and capabilities for designing, building, managing, and securing APIs across the enterprise.

3. Ensure Data Quality and Governance: Implement robust data governance practices to maintain data integrity, security, and compliance across integrated systems. Define clear data ownership, access controls, and quality standards to ensure that data is accurate, reliable, and consistent throughout its lifecycle. MuleSoft’s Anypoint Platform offers built-in capabilities for data governance, including data mapping, transformation, validation, and enrichment, enabling organizations to enforce data quality and governance policies across their integration initiatives.

4. Monitor and Optimize Performance: Continuously monitor the performance of your integration processes and leverage analytics to identify bottlenecks, optimize workflows, and improve efficiency. MuleSoft’s Anypoint Monitoring provides real-time visibility into integration applications, APIs, and runtime environments, allowing organizations to track key performance metrics, detect anomalies, and troubleshoot issues proactively. By monitoring and optimizing performance, businesses can ensure that their integration initiatives meet SLAs, comply with regulatory requirements, and deliver optimal outcomes.
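MuleSoft describes API-led connectivity in three layers: system APIs that unlock individual backends, process APIs that combine them into business capabilities, and experience APIs that tailor the result for a specific channel. In Anypoint Platform each layer would be a separately deployed API; the Python sketch below collapses them into plain functions purely to show the layering. The in-memory CRM and order data are hypothetical.

```python
from typing import Dict, List

# System layer: each function wraps one backend and hides its quirks.
# These in-memory dicts stand in for hypothetical CRM and order systems.
_CRM = {"C1": {"first": "Ana", "last": "Silva", "tier": "gold"}}
_ORDERS = {"C1": [{"id": "O-9", "total_cents": 4599}, {"id": "O-7", "total_cents": 1250}]}

def system_get_customer(customer_id: str) -> Dict:
    return _CRM[customer_id]

def system_list_orders(customer_id: str) -> List[Dict]:
    return _ORDERS.get(customer_id, [])

# Process layer: orchestrates system APIs into a reusable business capability.
def process_customer_summary(customer_id: str) -> Dict:
    customer = system_get_customer(customer_id)
    orders = system_list_orders(customer_id)
    return {
        "name": f"{customer['first']} {customer['last']}",
        "tier": customer["tier"],
        "order_count": len(orders),
        "lifetime_value_cents": sum(o["total_cents"] for o in orders),
    }

# Experience layer: shapes the same capability for one channel (here, mobile).
def experience_mobile_profile(customer_id: str) -> Dict:
    summary = process_customer_summary(customer_id)
    return {
        "title": summary["name"],
        "badge": summary["tier"].upper(),
        "spent": f"${summary['lifetime_value_cents'] / 100:.2f}",
    }

if __name__ == "__main__":
    print(experience_mobile_profile("C1"))
```

Because the mobile-facing function never touches the backends directly, replacing the CRM would only change the system-layer function; the process and experience layers stay as they are.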

Conclusion

In today’s interconnected world, seamless integration is essential for driving business success and delivering exceptional customer experiences. MuleSoft empowers organizations to overcome the complexities of integration, enabling them to connect systems, applications, and data sources effortlessly. By embracing MuleSoft’s integration platform and best practices, businesses can unlock new opportunities, accelerate innovation, and thrive in the digital age.

Interfacing Healthcare Systems: Strategies for Seamless Integration

In today’s rapidly evolving healthcare landscape, the seamless integration of various systems is crucial for delivering efficient and effective patient care. Interfacing healthcare systems involves connecting different software applications, devices, and databases to enable the smooth exchange of data and information. This article explores the significance of interfacing healthcare systems and presents strategies for achieving seamless integration.

Enhanced Patient Care Coordination:

The integration of electronic health records (EHRs), laboratory systems, and imaging systems allows healthcare providers to access comprehensive patient information. This enables better-informed clinical decision-making and coordinated care delivery across different healthcare settings. When every member of the care team can see up-to-date patient data, patient outcomes and satisfaction can improve.

Streamlined Clinical Workflows:

Seamless integration streamlines clinical workflows by automating manual processes and reducing administrative burdens. For instance, integrating electronic prescribing systems with pharmacy systems enables automated medication ordering and dispensing, leading to improved medication management and adherence. Similarly, interfacing scheduling systems with billing systems optimizes appointment scheduling and enhances revenue cycle management.

Data-driven Decision Making:

Interfacing healthcare systems empowers organizations to leverage data for informed decision-making and quality improvement initiatives. By aggregating data from various sources, including clinical, operational, and financial systems, organizations can perform advanced analytics to identify trends, monitor performance metrics, and drive evidence-based practices. This data-driven approach enhances operational efficiency and strategic planning.

Adherence to Interoperability Standards:

Adhering to interoperability standards such as HL7 and FHIR enables consistent data exchange between different healthcare applications and systems. These standards define common data formats and exchange mechanisms: HL7 version 2 messages are typically pipe-delimited text, while FHIR represents data as resources usually exchanged through a RESTful API. Standards alone do not secure data, so encryption and access controls still have to be applied around them to protect patient privacy. By following these standards, healthcare organizations can achieve more seamless integration and foster collaboration and information exchange.
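To show how the two standards relate, the sketch below maps an HL7 v2 PID (patient identification) segment into a FHIR R4 Patient resource. It is a minimal Python example under simplifying assumptions. It expects the default `|`, `^`, and `~` delimiters, ignores escape sequences, and handles only PID-3 (identifier), PID-5 (name), PID-7 (birth date), and PID-8 (sex). The sample patient is made up. A production interface would normally rely on an interface engine or a vetted HL7/FHIR library.

```python
import json

# HL7 v2 administrative sex codes mapped to FHIR AdministrativeGender codes (subset).
SEX_TO_GENDER = {"M": "male", "F": "female", "O": "other", "U": "unknown"}


def pid_to_fhir_patient(pid_segment: str) -> dict:
    """Map one HL7 v2 PID segment to a minimal FHIR R4 Patient resource.

    Assumes default delimiters (| field, ^ component, ~ repetition) and
    ignores escape sequences; a sketch, not a conformant interface.
    """
    fields = pid_segment.split("|")
    if fields[0] != "PID":
        raise ValueError("expected a PID segment")

    def field(n: int) -> str:
        # Outside MSH, fields[n] is PID-n; keep only the first repetition.
        return fields[n].split("~")[0] if n < len(fields) else ""

    patient: dict = {"resourceType": "Patient"}

    identifier = field(3).split("^")[0]  # PID-3.1: ID number
    if identifier:
        patient["identifier"] = [{"value": identifier}]

    name = field(5).split("^")  # PID-5: FAMILY^GIVEN^...
    if name[0]:
        human_name = {"family": name[0]}
        if len(name) > 1 and name[1]:
            human_name["given"] = [name[1]]
        patient["name"] = [human_name]

    birth = field(7)  # PID-7: YYYYMMDD...; FHIR expects YYYY-MM-DD
    if len(birth) >= 8:
        patient["birthDate"] = f"{birth[:4]}-{birth[4:6]}-{birth[6:8]}"

    gender = SEX_TO_GENDER.get(field(8))  # PID-8: administrative sex
    if gender:
        patient["gender"] = gender

    return patient


if __name__ == "__main__":
    sample = "PID|1||12345^^^HOSP^MR||DOE^JOHN||19800101|M"  # made-up patient
    print(json.dumps(pid_to_fhir_patient(sample), indent=2))
```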

Vendor Collaboration and Partnerships:

Collaboration with vendors and technology partners is essential for successful integration of healthcare systems. Organizations should work closely with software vendors, system integrators, and technology partners to ensure compatibility and smooth implementation of interfacing solutions. By fostering collaborative relationships, organizations can leverage expertise, resources, and support to achieve their integration goals effectively.

In conclusion, interfacing healthcare systems is essential for improving patient care coordination, streamlining clinical workflows, enabling data-driven decision-making, and promoting interoperability. By adhering to interoperability standards, collaborating closely with vendors, and training users on the connected systems, healthcare organizations can achieve seamless integration and unlock the full potential of interconnected healthcare IT systems.

Scaling Applications in the Cloud: Techniques for Performance Optimization

In today’s digital age, businesses are increasingly relying on cloud computing to power their applications and services. Cloud platforms offer unparalleled flexibility, scalability, and efficiency, enabling organizations to meet the demands of modern users and handle fluctuating workloads with ease. However, as applications scale up to accommodate growing user bases and increasing data volumes, ensuring optimal performance becomes paramount. In this article, we’ll explore techniques for scaling applications in the cloud and optimizing performance to deliver a seamless user experience.

1. Horizontal Scaling

Horizontal scaling, also known as scaling out, involves adding more instances of an application across multiple servers to distribute the workload evenly. This approach allows organizations to handle increased traffic and improve fault tolerance by spreading the load across a cluster of servers. Cloud platforms like AWS, Azure, and Google Cloud offer auto-scaling capabilities, allowing applications to automatically provision and de-provision resources based on demand. By leveraging horizontal scaling, businesses can ensure high availability and performance while minimizing infrastructure costs.
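Auto-scaling policies often use target tracking. They compare a metric such as average CPU utilization with a target and resize the group in proportion. The Python sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current * currentMetric / targetMetric). It adds a tolerance band and min/max bounds. The function name, parameters, and default values are illustrative assumptions, not any cloud provider's API.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional rule: ceil(current_replicas * current_metric / target_metric).

    Bounds and tolerance are illustrative defaults, not a provider's settings.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the group does not flap around the target.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Never drop below the floor (availability) or exceed the ceiling (cost).
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 instances averaging 90% CPU against a 60% target yields a request for 6 instances. The same rule scales back in when load drops.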

2. Vertical Scaling

Vertical scaling, or scaling up, involves upgrading the resources of individual servers, such as CPU, memory, and storage capacity, to accommodate increased workload demands. While vertical scaling can provide immediate performance improvements, it has clear limits. A server can only grow as large as the biggest instance size available, and resizing often requires a restart. A single large server also remains a single point of failure, and a server sized for peak load is paid for even when traffic is low. Cloud providers offer virtual machine instances and managed services in a wide range of sizes, enabling organizations to vertically scale their applications as needed. However, it’s essential to monitor resource utilization and adjust configurations over time to balance performance against cost.
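The "monitor and adjust" part of vertical scaling is often called rightsizing. A simple version picks the smallest instance size that covers observed peak usage plus some headroom. In the Python sketch below, the size ladder, the 30% headroom, and the function name are hypothetical. Real providers publish their own instance families and sizes.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InstanceSize:
    name: str
    vcpus: int
    memory_gib: int


# Hypothetical size ladder, ordered smallest to largest.
SIZES: List[InstanceSize] = [
    InstanceSize("small", 2, 4),
    InstanceSize("medium", 4, 8),
    InstanceSize("large", 8, 16),
    InstanceSize("xlarge", 16, 32),
]


def rightsize(peak_vcpus_used: float, peak_memory_gib_used: float,
              headroom: float = 0.3) -> Optional[InstanceSize]:
    """Return the smallest size covering peak usage plus headroom.

    None means even the largest size is too small: the vertical-scaling
    ceiling has been reached and scaling out is the remaining option.
    """
    need_cpu = peak_vcpus_used * (1 + headroom)
    need_mem = peak_memory_gib_used * (1 + headroom)
    for size in SIZES:
        if size.vcpus >= need_cpu and size.memory_gib >= need_mem:
            return size
    return None
```

The `None` case makes the ceiling explicit. When no single machine is large enough, the remaining option is to add servers rather than enlarge one.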

3. Load Balancing

Load balancing is a critical component of scalable architecture, distributing incoming traffic across multiple servers to ensure optimal resource utilization and prevent overloading. Cloud providers offer managed load balancing services that run health checks and route requests only to healthy servers, enhancing reliability and performance. Common load balancing algorithms let organizations fine-tune traffic distribution based on specific requirements. Round-robin rotates through servers in turn. Least connections favors the server with the fewest active connections. Weighted round-robin sends proportionally more traffic to higher-capacity servers. By implementing load balancing, businesses can achieve high availability, fault tolerance, and scalability for their applications.
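The three algorithms named above can be sketched in Python as follows. These are simplified, single-threaded illustrations, not how any particular cloud load balancer is implemented. The weighted variant uses the "smooth" weighted round-robin approach associated with nginx. It interleaves servers rather than sending a burst of consecutive requests to the heaviest one.

```python
import itertools
from typing import Dict, List


class RoundRobin:
    def __init__(self, servers: List[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    def __init__(self, servers: List[str]):
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        # First server (in list order) with the fewest open connections.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1  # call when the connection closes


class SmoothWeightedRoundRobin:
    def __init__(self, weights: Dict[str, int]):
        self.weights = dict(weights)
        self.current = {s: 0 for s in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns its weight; the leader is chosen and pays back the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen
```

With weights a=5, b=1, c=1, seven picks come out as a, a, b, a, c, a, a. Server "a" gets five of every seven requests without receiving them all in a row.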

4. Caching

Caching involves storing frequently accessed data or computations in memory to reduce latency and improve response times. Cloud platforms offer managed caching services, such as Amazon ElastiCache, Azure Cache for Redis, and Google Cloud Memorystore, which provide high-performance, scalable caching solutions. By caching static content, database queries, and computed results, organizations can alleviate pressure on backend systems, enhance scalability, and deliver faster user experiences. It’s essential to implement caching strategies carefully, considering factors like cache eviction policies, data consistency, and cache invalidation mechanisms to ensure optimal performance.
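The cache-aside pattern can be sketched in-process in Python, with a time-to-live (TTL) for expiry, LRU eviction, and explicit invalidation. Managed services such as ElastiCache or Memorystore provide a shared, networked cache for the same purpose. The class below is a hypothetical illustration and does not use their APIs. The injectable clock exists only to make expiry easy to test.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable


class TTLCache:
    """In-process cache-aside helper with LRU eviction and per-entry TTL."""

    def __init__(self, max_entries: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_entries = max_entries
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: "OrderedDict[Hashable, tuple]" = OrderedDict()  # key -> (value, expires_at)

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self.clock()
        entry = self._data.get(key)
        if entry is not None and entry[1] > now:
            self._data.move_to_end(key)  # hit: mark as most recently used
            return entry[0]
        value = loader(key)  # miss or expired: go to the backend
        self._data[key] = (value, now + self.ttl)
        self._data.move_to_end(key)
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop a key after the underlying data changes."""
        self._data.pop(key, None)
```

The TTL bounds how stale a value can get. Explicit invalidation on writes keeps hot keys consistent without waiting for expiry.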

5. Database Optimization

Database performance plays a crucial role in application scalability and responsiveness. Cloud databases offer various optimization techniques, such as indexing, query optimization, partitioning, and sharding, to improve performance and scalability. Additionally, cloud providers offer managed database services with built-in scalability features, such as auto-scaling, replication, and failover, to handle growing workloads seamlessly. By optimizing database configurations, tuning queries, and leveraging caching mechanisms, organizations can enhance application performance, scalability, and reliability in the cloud.
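Python's standard-library `sqlite3` module makes the effect of an index easy to observe. SQLite here is only a stand-in for a cloud database. Once `customer_id` is indexed, the same query changes from a full table scan to an index search. The table, column, and index names are made up, and the exact plan text varies between SQLite versions.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params=()) -> str:
    """Return SQLite's human-readable query plan for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)  # detail text is the last column


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

query = "SELECT id, total FROM orders WHERE customer_id = ?"
before = plan(conn, query, (42,))  # no index yet: full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders(customer_id)")
after = plan(conn, query, (42,))   # index search on customer_id

print("before:", before)
print("after: ", after)
```

Checking the plan before and after a change is a quick way to confirm that an index is actually used. Indexes also add write and storage overhead, so they pay off on columns that queries filter on frequently.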

Conclusion

Scaling applications in the cloud requires careful planning, strategic decision-making, and continuous optimization to ensure optimal performance and scalability. By adopting techniques like horizontal scaling, vertical scaling, load balancing, caching, and database optimization, organizations can effectively manage growing workloads, deliver seamless user experiences, and stay competitive in today’s digital landscape. As businesses embrace cloud computing for their applications, mastering the art of scaling becomes essential for driving innovation, accelerating growth, and achieving success in the cloud era.